Here’s how I respond to "have we tested everything?"

OH: "How can we be sure we have tested everything?" That's the neat part, you don't.

I used to get really frustrated when people asked me this question. The person asking is looking for something I cannot give them, even though I would like to give them this gift: certainty. This made me feel frustrated with the testing profession and the person asking the question.

I'd go into "lecturing mode", which probably came across as quite defensive. It never accomplished much. The question asker felt attacked by me, and I felt like I couldn't "defend" testing well enough.

These days, I have found a new way of handling this question. I now see it as a signal. The person who poses this question doesn't know the strengths and limitations of software testing.

Instead of immediately launching into a lecture about testing, I simply ask a question in return: What does testing everything mean to you?

The last time I had the pleasure of receiving this question, this led to the question asker going "uhhhh, well, I just want to be sure that when we go live there will be no problems. I currently don't have a good feeling about it at all. I want [the testers] to sign off on this. If we go live and there are problems, they will be at the bottom of the list because they signed off on it."

Woah. This gave me a ton more information to work with. A couple of red flags, too.

First off, I don't like the us (devs) versus them (testers) mentality. That's not how we should roll. It sounds like this person wants to cover their ass by putting all the responsibilities of issue-finding on the testers. No good will come of going down this blame route.

I said that, in my experience, all code has bugs. Of course, we do our best to find most of them by employing risk-based testing in the limited time we have. It's a shared effort.

The question asker was also looking for a certain feeling that testing will not provide. We can never be completely sure that there will be no problems in production after go-live. I'd rather pose the opposite: it's more realistic to expect problems, and being able to fix them swiftly should be the priority. We have monitoring in place that will surely help us spot certain problems, I told the question asker.

In the never-ending quest to find new information to inform our understanding of the software we are making, a good tester uses a risk-based approach. Likewise, an experienced tester knows that there are always unknowns. With exploratory testing, we try to uncover information in those areas, but there's never enough time to cover them all.

I'm under no illusion that I completely overhauled this person's idea of testing, but I tried to lead the discussion away from feelings and ass-covering to the more practical realm. What can we (devs && testers) do before go-live to find the most pressing problems? Can we easily spot unforeseen problems after go-live? Do we have techniques in place to bring fixes to production easily?

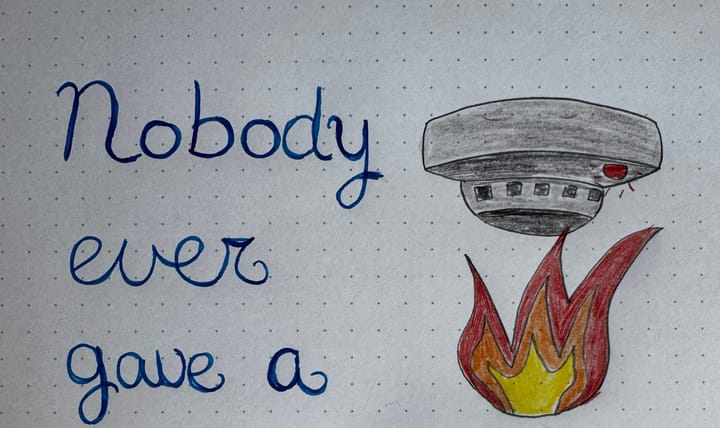

The question "have we tested everything?" is interesting to me, but not very practical. It's like chasing a rainbow. It's a good signal, though, to explore what other people think testing can and cannot do.

How do you respond to the question?
